What Is MCP in AI? A Simple Guide to Connecting and Automating Your LLM

Learn how MCP (Model Context Protocol) lets AI assistants like ChatGPT and Claude access live data, call tools, and automate tasks, all through one unified interface.

Why AI Agents Need MCP to Act

We use AI every day to answer questions, yet on its own it can’t take direct actions. To perform tasks, AI needs to work with other tools and systems.

The Model Context Protocol (MCP) provides a single, standard interface for plugging AI into external systems. In this article, we’ll explore how MCP works and why it matters to you.

What Is an MCP in AI?

The Model Context Protocol (MCP) lets AI systems connect to tools and data through one standard interface. MCP defines how AI “clients” and “servers” communicate using JSON-RPC 2.0 messages, carried over standard input/output for local servers or over HTTP for remote ones. You avoid writing custom code for each service: just plug any MCP-enabled tool into your AI environment.
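To make the JSON-RPC exchange concrete, here is a minimal standard-library sketch that builds the first two messages of an MCP session: the client’s `initialize` request and the `notifications/initialized` notification it sends once the server has replied. The field names follow the MCP specification as we understand it; the protocol version string, the client name, and the helper functions are illustrative assumptions, so check the current spec before relying on them.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Request IDs; JSON-RPC matches each response to its request by "id".
_ids = count(1)


def request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 notification: no "id", so no response comes back."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def frame(msg: Dict[str, Any]) -> str:
    """Serialize one message per line, as MCP's stdio transport expects."""
    return json.dumps(msg) + "\n"


# Step 1: the client opens the session and states what it supports.
init = request(
    "initialize",
    {
        "protocolVersion": "2025-06-18",  # assumed version string; check the spec
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
    },
)

# Step 2: after the server's reply arrives, the client confirms it is ready.
ready = notification("notifications/initialized")

print(frame(init), end="")
print(frame(ready), end="")
```

Requests carry an `id` and get a matching response; notifications omit it and get none. That distinction is what lets one connection mix calls and one-way signals.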

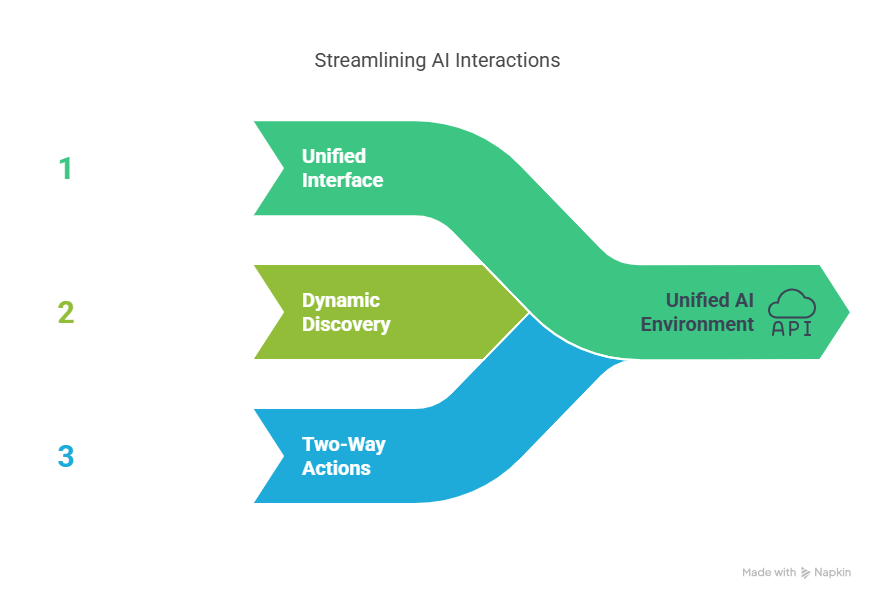

Core MCP Benefits

- Unified interface: One protocol to access all your tools.

- Dynamic discovery: Agents find services at runtime.

- Two-way actions: Agents fetch data and trigger operations.

Pro Tip:

Register each tool once as an MCP server. Then any AI client can use all tools without extra integration work.

For a deeper dive into MCP tools, see the official documentation: Anthropic MCP Docs.

What Is MCP for Large Language Models (LLMs)?

MCP lets large language models (LLMs) like ChatGPT use external tools and fresh data. It defines a simple interface between the application running the LLM (the “client”) and any MCP-enabled service (the “server”). Through that client, the LLM can discover available tools and invoke them on demand. This gives it real-time context and the ability to take actions beyond its training data.

Key MCP Capabilities for LLMs

- Live data fetch: Query databases, web APIs, or file systems on demand.

- Function calls: Execute scripts or operations mid-conversation.

- Context injection: Pull in only the fresh data a task needs, instead of packing everything into the prompt up front.
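On the client side, the capabilities above come down to two steps: send a `tools/call` request naming a tool and its arguments, then fold the text content of the result into the model’s context. The sketch below shows both steps with plain dictionaries. The `get_weather` tool, its arguments, and the canned server reply are hypothetical stand-ins for a live MCP server.

```python
import json
from typing import Any, Dict


def build_tool_call(req_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Create a JSON-RPC request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": req_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def result_to_context(response: Dict[str, Any]) -> str:
    """Turn a tools/call response into text the LLM can read in its prompt."""
    if "error" in response:
        # Protocol-level failure, e.g. an unknown method or invalid parameters.
        return f"[tool error] {response['error']['message']}"
    result = response["result"]
    # Keep only text blocks; real results may also carry images or resources.
    texts = [b["text"] for b in result.get("content", []) if b.get("type") == "text"]
    prefix = "[tool reported failure] " if result.get("isError") else ""
    return prefix + "\n".join(texts)


# Hypothetical tool call and reply, standing in for a live MCP server.
call = build_tool_call(7, "get_weather", {"city": "Berlin"})
reply = {
    "jsonrpc": "2.0",
    "id": 7,
    "result": {"content": [{"type": "text", "text": "Berlin: 18C, light rain"}], "isError": False},
}

# Inject just the fresh data the task needs into the prompt.
prompt = "Answer using this fresh data:\n" + result_to_context(reply)
print(json.dumps(call))
print(prompt)
```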

Figure: An LLM client discovers and calls multiple MCP servers for data and actions.

Pro Tip:

Run a local MCP server when developing LLM integrations. Test tool calls without exposing production data.
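For local development, the stdio transport is the simplest setup: the client launches the server as a subprocess, and the two exchange one JSON-RPC message per line. The following standard-library sketch is a toy server, not the official SDK. It answers `initialize`, `tools/list`, and `tools/call` for a single hypothetical `add` tool, and it deliberately skips parts of the spec (real version negotiation, cancellation, logging) to stay short.

```python
import json
import sys
from typing import Any, Dict, Optional, TextIO

# One hypothetical tool, advertised with a JSON Schema for its inputs.
TOOLS = [
    {
        "name": "add",
        "description": "Add two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
    }
]


def ok(req_id: Any, result: Dict[str, Any]) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def error(req_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(msg: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Return a response for a request, or None for a notification."""
    if "id" not in msg:
        return None  # e.g. notifications/initialized: no reply is sent
    req_id, method, params = msg["id"], msg.get("method"), msg.get("params", {})
    if method == "initialize":
        # Toy behavior: echo the client's version instead of negotiating one.
        return ok(req_id, {
            "protocolVersion": params.get("protocolVersion", "2025-06-18"),
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "toy-server", "version": "0.1.0"},
        })
    if method == "tools/list":
        return ok(req_id, {"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "add":
            return error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        args = params.get("arguments", {})
        try:
            total = args["a"] + args["b"]
        except (KeyError, TypeError) as exc:
            # Tool-level failure: reported inside the result so the model can react.
            text = f"Bad arguments: {exc!r}"
            return ok(req_id, {"content": [{"type": "text", "text": text}], "isError": True})
        return ok(req_id, {"content": [{"type": "text", "text": str(total)}], "isError": False})
    return error(req_id, -32601, f"Method not found: {method}")


def serve(stream_in: TextIO, stream_out: TextIO) -> None:
    """Read newline-delimited JSON from stream_in; write one reply per line."""
    for line in stream_in:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            stream_out.write(json.dumps(reply) + "\n")
            stream_out.flush()


def main() -> None:
    """Entry point when an MCP client launches this file as a subprocess."""
    serve(sys.stdin, sys.stdout)
```

Keeping `handle` separate from `serve` means you can unit-test tool behavior with plain dictionaries, without any real or production data flowing through the transport.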

For more on getting started, check the protocol introduction: MCP Introduction.

Can ChatGPT Use MCP?

Yes. ChatGPT now supports MCP, letting it call external tools directly. In March 2025, OpenAI added MCP support to its Agents SDK and announced support for the ChatGPT desktop app and the Responses API.

With MCP enabled, ChatGPT can query live databases, run scripts, and update files.

Point your ChatGPT agent at your MCP servers, and it discovers and uses your tools automatically.
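Behind “discovers and uses your tools automatically” is a simple translation step: the agent fetches each server’s tool list and hands those descriptions to the model in whatever format its function-calling interface expects. The sketch below assumes a Chat Completions-style `{"type": "function", ...}` tool format, and the `query_orders` listing it converts is made up for illustration. SDKs with MCP support, such as OpenAI’s Agents SDK, typically handle this mapping for you.

```python
from typing import Any, Dict, List


def mcp_tools_to_functions(tools: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Map MCP tool descriptors onto a function-calling tool format."""
    converted = []
    for tool in tools:
        converted.append(
            {
                "type": "function",
                "function": {
                    "name": tool["name"],
                    "description": tool.get("description", ""),
                    # MCP already describes inputs as JSON Schema, so it passes through.
                    "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
                },
            }
        )
    return converted


# Hypothetical result of a tools/list call against one server.
listing = {
    "tools": [
        {
            "name": "query_orders",
            "description": "Look up recent orders for a customer.",
            "inputSchema": {
                "type": "object",
                "properties": {"customer_id": {"type": "string"}},
                "required": ["customer_id"],
            },
        }
    ]
}

functions = mcp_tools_to_functions(listing["tools"])
print(functions[0]["function"]["name"])
```

When the model later picks `query_orders`, the agent routes that choice back to the owning server as a `tools/call` request.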

Key ChatGPT MCP Features

- Agents SDK: Connect the agents you build to MCP servers to extend their abilities.

- Desktop app: Enable MCP in settings to connect tools locally.

- API support: Call MCP-enabled services in your own applications.

Figure: Enabling MCP tools in ChatGPT’s settings and invoking them in the chat.

Pro Tip:

Enable “Developer Mode” in ChatGPT’s desktop app to test your MCP servers before production.

For setup instructions, read the announcement: OpenAI Adds MCP Support.

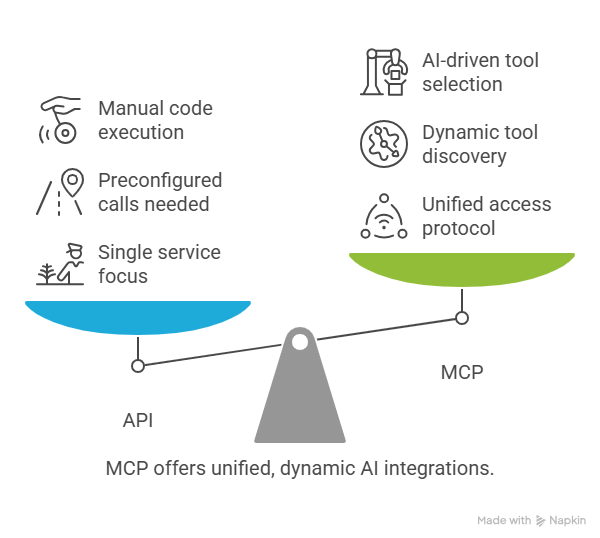

What Is the Difference Between an API and MCP?

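An API (Application Programming Interface) defines how an application calls one specific service. MCP unifies access to many services under a single protocol. With direct API integrations, you write custom code for each tool in each AI application; with MCP, each tool needs only one server, which any MCP client can reuse. In practice, an MCP server often wraps an existing API, so the two work together rather than compete. This shift makes AI integrations faster to build and easier to maintain.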

Key Contrasts

- Scope:
  - API: Targets one service at a time.
  - MCP: Targets all your tools through one protocol.

- Discoverability:
  - API: Requires preconfigured calls.
  - MCP: Lets AI find available tools at runtime.

- Autonomy:
  - API: Runs when you code it.
  - MCP: Lets AI choose and invoke tools as needed.

- Statefulness:
  - API: Handles single, stateless requests.
  - MCP: Supports multi-step, stateful conversations.
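The contrast is easiest to see in code. In this in-process sketch, which illustrates the idea rather than the MCP wire protocol, two hypothetical services (`crm_lookup` and `ticket_create`, invented for illustration) expose differently shaped calls. Each gets one thin wrapper that registers it as a described tool; after that, the agent needs only a runtime listing and a single generic `call_tool` path instead of bespoke code per service.

```python
from typing import Any, Callable, Dict


# Two hypothetical services with different call conventions (the "API" world).
def crm_lookup(customer_id: str) -> Dict[str, Any]:
    return {"id": customer_id, "tier": "gold"}


def ticket_create(payload: Dict[str, str]) -> int:
    return 4211  # pretend ticket number


# One wrapper per service turns each into a uniformly described tool.
REGISTRY: Dict[str, Dict[str, Any]] = {}


def register(name: str, description: str, fn: Callable[[Dict[str, Any]], Any]) -> None:
    REGISTRY[name] = {"description": description, "fn": fn}


register("get_customer", "Fetch a CRM record.", lambda args: crm_lookup(args["customer_id"]))
register("open_ticket", "Open a support ticket.", lambda args: ticket_create({"title": args["title"]}))


def list_tools() -> Dict[str, str]:
    """Runtime discovery: the agent sees names and descriptions, not endpoints."""
    return {name: entry["description"] for name, entry in REGISTRY.items()}


def call_tool(name: str, args: Dict[str, Any]) -> Any:
    """One generic invocation path, whatever the underlying API looks like."""
    return REGISTRY[name]["fn"](args)


print(list_tools())
print(call_tool("get_customer", {"customer_id": "c-42"}))
```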

Pro Tip:

When you add a new service, register it once as an MCP server. Your AI agent gains access immediately—no extra code.

Learn more about the protocol: Model Context Protocol (Wikipedia).

What Are MCP AI Tools?

MCP AI tools are software components that implement the Model Context Protocol. Each tool runs as an MCP “server,” exposing functions and data through the protocol. AI agents act as MCP “clients,” discovering and invoking these tools on demand.

Popular MCP AI Tools and Related Protocols

- Anthropic’s MCP: Open-source reference servers and SDKs with connectors for files, databases, messaging, and more.

- Google A2A (Agent2Agent Protocol): A standard for AI agent collaboration and task delegation. It complements MCP rather than implementing it: A2A connects agents to each other, while MCP connects agents to tools and data.

- IBM ACP (Agent Communication Protocol): Another agent-to-agent protocol, optimized for local, real-time agent collaboration in edge or IoT settings.

- Community Connectors: GitHub-hosted MCP servers for CRMs, analytics, CI/CD, and specialized APIs.

Pro Tip:

Browse community docs on GitHub: MCP Documentation Repo.

Anthropic’s MCP AI Tool

Anthropic’s MCP is the flagship, open-source implementation of the Model Context Protocol. It provides SDKs and a CLI for standing up MCP servers that wrap your data sources and services. Out of the box, it includes connectors for file storage, databases, messaging apps, and more.

Key Features

- Multi-language SDKs: Build MCP servers in Python, TypeScript/JavaScript, Java, Kotlin, C#, and other languages.

- Built-in Connectors: Adapters for Google Drive, Slack, Git, SQL, and others.

- Auto-Generated Schemas: Define a tool once as a typed function; the SDK generates the JSON Schema descriptor that AI clients use for discovery.

- Model-Agnostic: Use with any LLM or agent supporting MCP.
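The “define once, generate the descriptor” idea can be illustrated with the standard library alone. The sketch below reads a function’s signature and type hints and produces an MCP-style tool descriptor with a JSON Schema `inputSchema`. It is a simplified stand-in, not SDK code; the official Python SDK’s decorator-based tool API works in a similar spirit but covers far more types. The `search_docs` tool and the type mapping are assumptions for illustration.

```python
import inspect
from typing import Any, Callable, Dict, List, get_type_hints

# Minimal Python-type to JSON Schema mapping (an assumption; SDKs cover far more).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean", list: "array", dict: "object"}


def describe_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Build an MCP-style tool descriptor from a typed Python function."""
    hints = get_type_hints(fn)
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated or unmapped parameters fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name, str), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the caller must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def search_docs(query: str, limit: int = 5) -> list:
    """Search internal documentation and return matching titles."""
    return []


print(describe_tool(search_docs))
```

Because the descriptor comes from the function itself, renaming a parameter or adding a default updates what clients discover, with no hand-edited JSON to drift out of sync.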

Pro Tip:

Use the SDK’s project templates or scaffolding tools to start a new server, so you rarely write tool descriptors by hand. Check the SDK documentation for the current commands.

For detailed setup, visit: Anthropic News: Introducing MCP.

MCP AI vs. API

Applications use APIs to connect to one service at a time. AI agents use MCP to connect to many services through a single protocol. This cuts development work and boosts flexibility.

1. Integration Effort

- API: Maintain separate code per service.

- MCP: One MCP server per tool; AI clients gain instant access.

2. Runtime Discovery

- API: Endpoints preconfigured in code.

- MCP: Agents discover available tools at runtime.

3. Workflow Flexibility

- API: Fixed request–response per call.

- MCP: Supports multi-step, stateful conversations and dynamic calls.

4. Maintenance Overhead

- API: Update each integration when services change.

- MCP: Update only the MCP server; all agents benefit.
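The “stateful conversations” in item 3 refer to the session an MCP client and server share: they negotiate capabilities once during initialization, and later requests run inside that session. The toy class below tracks a simplified version of that lifecycle on the server side and refuses work before the handshake completes. The state names and error message are illustrative assumptions; the specification defines the lifecycle in more detail.

```python
from typing import List


class ToySession:
    """A simplified server-side view of one MCP session's lifecycle."""

    def __init__(self) -> None:
        self.state = "new"  # new -> initializing -> ready
        self.history: List[str] = []  # requests handled in this session so far

    def receive(self, method: str) -> str:
        if method == "ping":
            return "pong"  # pings are allowed at any point
        if method == "initialize":
            self.state = "initializing"  # capabilities are negotiated here
            return "capabilities sent"
        if method == "notifications/initialized":
            if self.state == "initializing":
                self.state = "ready"
            return "ok"
        if self.state != "ready":
            raise RuntimeError(f"{method} received before initialization finished")
        self.history.append(method)
        return f"handled {method} (call #{len(self.history)} in this session)"


session = ToySession()
session.receive("initialize")
session.receive("notifications/initialized")
print(session.receive("tools/list"))  # handled tools/list (call #1 in this session)
print(session.receive("tools/call"))  # handled tools/call (call #2 in this session)
```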

Pro Tip:

Register new services once as MCP servers. Your AI agents can use them immediately.

For more comparison details, see: TechCrunch on MCP Adoption.

What Is MCP Automation?

MCP automation lets AI agents perform end-to-end tasks across your systems. You define triggers, and the AI agent calls MCP tools to run workflows without manual steps. Routine jobs—like ticketing, reporting, or device checks—move off your to-do list and into AI hands.

How MCP Drives Automation

- Event triggers: AI watches data streams or schedules via MCP servers.

- Action chains: On trigger, AI invokes a series of MCP tools in order.

- Stateful flows: The agent tracks progress and handles errors mid-workflow.

Pro Tip:

Define clear success criteria and guardrails. Let the AI retry failures or alert you when it needs help.
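A chain of tool calls with retries and an escalation path fits in a few lines. In this sketch, the steps are plain Python callables standing in for MCP tool calls (a real agent would send `tools/call` requests), and the step names, retry budget, and `alert` hook are hypothetical. The runner passes shared state from step to step, retries a failing step a bounded number of times, and then stops and alerts a human, which is one way to turn the guardrails above into code.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict[str, Any]], Any]]


def run_chain(steps: List[Step], max_retries: int = 2,
              alert: Callable[[str], None] = print) -> Dict[str, Any]:
    """Run steps in order with shared state; retry failures, then escalate."""
    state: Dict[str, Any] = {}
    for name, step in steps:
        for attempt in range(1, max_retries + 2):  # first try plus max_retries retries
            try:
                state[name] = step(state)  # each step can read earlier results
                break
            except Exception as exc:  # broad on purpose: any tool failure counts
                if attempt > max_retries:
                    alert(f"Step '{name}' failed after {attempt} attempts: {exc}")
                    state["failed_step"] = name
                    return state
    return state


# Hypothetical workflow: a device check that times out once, then a ticket step.
calls = {"check": 0}


def check_device(state: Dict[str, Any]) -> str:
    calls["check"] += 1
    if calls["check"] == 1:
        raise TimeoutError("device did not answer")  # transient failure
    return "offline"


def open_ticket(state: Dict[str, Any]) -> str:
    return f"ticket opened: device is {state['check_device']}"


result = run_chain([("check_device", check_device), ("open_ticket", open_ticket)])
print(result)
```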

For a real-world example in AV, see: Xyte’s MCP Server for AV.

Conclusion

You’ve seen how MCP unifies tool access, lets AI fetch live data and invoke actions, and supports stateful workflows. The protocol cuts integration effort, adds flexibility, and opens the door to AI-driven automation. By adopting MCP, you turn static language models into proactive agents that can both reason and act.

Next Steps to Try MCP

- Stand up an MCP server: Scaffold a connector with Anthropic’s SDK. Wrap one data source or API as an MCP server.

- Connect your LLM or agent: Point ChatGPT or your custom agent to the server. Enable discovery and simple tool calls.

- Test a simple workflow: Write a prompt that calls your MCP tool. Verify data retrieval and action execution.

- Secure and monitor: Apply OAuth or API keys. Log all tool invocations and set retry or alert rules.
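The “secure and monitor” step can start as a thin wrapper around each tool handler. The sketch below checks a token against a configured key in constant time and logs every invocation, with its arguments, outcome, and duration, through Python’s `logging` module. The `API_KEY` value and the `lookup_invoice` tool are hypothetical; for remote HTTP servers, the OAuth-based authorization flow in the MCP specification is the more complete option.

```python
import functools
import hmac
import logging
import time
from typing import Any, Callable, Dict

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("mcp.tools")

API_KEY = "change-me"  # hypothetical; load from a secret store in practice


def guarded(fn: Callable[[Dict[str, Any]], Any]) -> Callable[[str, Dict[str, Any]], Any]:
    """Require a valid token and log each call to the wrapped tool."""

    @functools.wraps(fn)
    def wrapper(token: str, args: Dict[str, Any]) -> Any:
        # Constant-time comparison avoids leaking the key through timing.
        if not hmac.compare_digest(token, API_KEY):
            log.warning("rejected call to %s: bad token", fn.__name__)
            raise PermissionError("invalid API key")
        start = time.perf_counter()
        try:
            result = fn(args)
        except Exception:
            # Log the failure with a traceback, then let the caller handle it.
            log.exception("%s args=%s failed", fn.__name__, args)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        # Consider redacting sensitive fields before logging arguments.
        log.info("%s args=%s ok in %.1f ms", fn.__name__, args, elapsed_ms)
        return result

    return wrapper


@guarded
def lookup_invoice(args: Dict[str, Any]) -> str:
    return f"Invoice {args['invoice_id']}: paid"


print(lookup_invoice("change-me", {"invoice_id": "INV-7"}))
```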

Pro Tip:

Start small: expose a single tool, run a demo workflow, then scale up. Build confidence and best practices early.

Ready to build? Check the official docs: